Crop and weed segmentation

Weeds are a major agricultural problem that reduces crop yield and quality, especially in large fields. Deep learning-based image recognition can accurately and efficiently process the large numbers of images acquired by drones, field robots, and similar platforms. There is therefore strong demand for efficient deep learning-based weed detection systems that help prevent agricultural losses. In this tutorial, we show how to detect and segment crops and weeds with Mask R-CNN[1] using JustDeepIt.

Dataset preparation

The SugarBeets2016[2] dataset contains 11,552 RGB images captured in the field, and each image has an annotation for sugar beets and weeds. The dataset can be downloaded from the StachnissLab website. Clicking the Complete Dataset link on the StachnissLab website opens the download page. Next, click the annotations link and then the cropweed link to open the detailed data download page. On this page, download all 11 files named ijrr_sugarbeets_2016_annotations.part*.rar, where * is a two-digit number from 01 to 11.
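The annotations are split into 11 RAR volumes, and extraction will typically stop if any volume is missing. It can therefore help to confirm that every part is present before decompressing. The following minimal sketch assumes the parts were saved to one directory under the names given above. The helper names `expected_parts` and `missing_parts` are hypothetical and are not part of JustDeepIt.

```python
from pathlib import Path

# Archive names follow the pattern given on the download page:
# ijrr_sugarbeets_2016_annotations.part01.rar ... .part11.rar
PREFIX = "ijrr_sugarbeets_2016_annotations"
N_PARTS = 11


def expected_parts(n_parts=N_PARTS):
    """Return the expected archive names, with zero-padded two-digit part numbers."""
    return [f"{PREFIX}.part{i:02d}.rar" for i in range(1, n_parts + 1)]


def missing_parts(download_dir=".", n_parts=N_PARTS):
    """List the archive parts that are not yet present in download_dir."""
    folder = Path(download_dir)
    return [name for name in expected_parts(n_parts) if not (folder / name).is_file()]


if __name__ == "__main__":
    missing = missing_parts()
    print("missing parts:", missing if missing else "none")
```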

After downloading the 11 files, we decompress them with an archive tool that supports multi-part RAR archives (e.g., unrar). Decompression generates the ijrr_sugarbeets_2016_annotations folder, which contains CRA_16.... folders holding the images and annotations (RGB masks).

Next, we randomly select 5,000 images for training and 1,000 images for testing and save them into the train and test folders, respectively.
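The random selection can be sketched as follows, assuming a list of image paths collected from the CRA_16.... folders. The sketch draws all 6,000 paths in a single sample without replacement and then slices it, so the training and test sets cannot overlap. The fixed seed, an arbitrary choice here, makes the split reproducible. `split_images` is a hypothetical helper and does not reproduce the logic of convert_rgbmask2coco.py.

```python
import random


def split_images(image_paths, n_train=5000, n_test=1000, seed=0):
    """Randomly draw disjoint training and test subsets from image_paths."""
    # Sort first so the result depends only on the seed, not on directory listing order.
    paths = sorted(image_paths)
    if n_train + n_test > len(paths):
        raise ValueError("not enough images for the requested split")
    rng = random.Random(seed)
    # One sample without replacement, then slice: the two subsets cannot overlap.
    sampled = rng.sample(paths, n_train + n_test)
    return sampled[:n_train], sampled[n_train:]
```

Copying each selected image into the train or test folder is then a loop over the two returned lists.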

Because JustDeepIt requires annotations in the COCO format, we need to convert the RGB masks into a COCO format file (train.json). The Python script convert_rgbmask2coco.py, stored on GitHub (JustDeepIt/tutorials/SugarBeets2016/scripts), performs the random sampling and the format conversion at the same time.
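The core of the conversion turns each colour region of an RGB mask into COCO instance annotations. It can be sketched as follows. This is not the implementation of convert_rgbmask2coco.py. It assumes that sugar beet pixels are pure green (0, 255, 0) and weed pixels are pure red (255, 0, 0), so check this colour coding against the dataset documentation. It also treats every 4-connected region as one instance and stores each instance mask as uncompressed COCO RLE. All function names are hypothetical.

```python
from collections import deque

import numpy as np

# ASSUMPTION: colour coding of the RGB masks; verify against the dataset documentation.
# Category IDs follow the order of class_label.txt (sugarbeets first, weeds second).
COLOR_TO_CATEGORY = {
    (0, 255, 0): 1,  # sugarbeets
    (255, 0, 0): 2,  # weeds
}
CATEGORIES = [{"id": 1, "name": "sugarbeets"}, {"id": 2, "name": "weeds"}]


def connected_components(binary):
    """Yield one boolean mask per 4-connected region of True pixels (breadth-first search)."""
    h, w = binary.shape
    seen = np.zeros((h, w), dtype=bool)
    for y in range(h):
        for x in range(w):
            if not binary[y, x] or seen[y, x]:
                continue
            region = np.zeros((h, w), dtype=bool)
            seen[y, x] = True
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                region[cy, cx] = True
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and binary[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            yield region


def binary_mask_to_rle(mask):
    """Uncompressed COCO RLE: run lengths over the column-major mask, starting with zeros."""
    counts, prev, run = [], 0, 0
    for value in mask.flatten(order="F"):
        value = int(value)
        if value != prev:
            counts.append(run)
            prev, run = value, 0
        run += 1
    counts.append(run)
    return {"counts": counts, "size": [int(s) for s in mask.shape]}


def mask_to_annotations(rgb_mask, image_id, first_ann_id=1):
    """Turn one H x W x 3 RGB mask into COCO annotations, one per connected region."""
    annotations = []
    for color, category_id in COLOR_TO_CATEGORY.items():
        binary = np.all(rgb_mask == np.array(color, dtype=rgb_mask.dtype), axis=-1)
        for region in connected_components(binary):
            ys, xs = np.nonzero(region)
            x0, y0 = int(xs.min()), int(ys.min())
            annotations.append({
                "id": first_ann_id + len(annotations),
                "image_id": image_id,
                "category_id": category_id,
                "segmentation": binary_mask_to_rle(region),
                "area": int(region.sum()),
                # COCO boxes are [x, y, width, height] in pixels.
                "bbox": [x0, y0, int(xs.max()) - x0 + 1, int(ys.max()) - y0 + 1],
                "iscrowd": 0,
            })
    return annotations


def build_coco(samples):
    """Assemble a COCO dict (ready for json.dump) from (file_name, rgb_mask) pairs."""
    coco = {"images": [], "annotations": [], "categories": CATEGORIES}
    for image_id, (file_name, rgb_mask) in enumerate(samples, start=1):
        h, w = rgb_mask.shape[:2]
        coco["images"].append({"id": image_id, "file_name": file_name, "width": w, "height": h})
        coco["annotations"].extend(
            mask_to_annotations(rgb_mask, image_id, len(coco["annotations"]) + 1))
    return coco
```

In practice, `scipy.ndimage.label` performs the same connected-component labelling much faster than the pure-Python search used here. The dictionary returned by `build_coco` can be written to train.json with `json.dump`.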

JustDeepIt also requires a text file containing the class names. Since the SugarBeets2016 dataset has two classes, sugar beets and weeds, we create a file class_label.txt with “sugarbeets” on the first line and “weeds” on the second line.
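The class label file can also be generated programmatically. This small sketch writes one class name per line, in the order described above. The helper names are hypothetical, and the output file is assumed to sit next to train.json.

```python
from pathlib import Path

# Order matters: the first line is the first class, the second line the second class.
CLASS_NAMES = ["sugarbeets", "weeds"]


def class_label_text(names=CLASS_NAMES):
    """Return the file contents: one class name per line."""
    return "\n".join(names) + "\n"


def write_class_labels(path="class_label.txt", names=CLASS_NAMES):
    """Write class_label.txt and return its path."""
    out = Path(path)
    out.write_text(class_label_text(names), encoding="utf-8")
    return out
```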

The dataset preparation above can be performed manually or automatically with the following shell commands:

```shell
# download tutorials/SugarBeets2016 or clone the JustDeepIt repository from https://github.com/biunit/JustDeepIt
git clone https://github.com/biunit/JustDeepIt
cd JustDeepIt/tutorials/SugarBeets2016

# download image data (11 archive parts)
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part01.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part02.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part03.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part04.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part05.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part06.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part07.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part08.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part09.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part10.rar
wget https://www.ipb.uni-bonn.de/datasets_IJRR2017/annotations/cropweed/ijrr_sugarbeets_2016_annotations.part11.rar

# decompress data (extracting the first volume also extracts the remaining parts, keeping folder paths)
unrar x ijrr_sugarbeets_2016_annotations.part01.rar

# generate training and test images and annotations in COCO format
python scripts/convert_rgbmask2coco.py ijrr_sugarbeets_2016_annotations .
```
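Before loading the workspace, a quick sanity check on the generated files can catch path problems early. This sketch assumes that the `file_name` entries in train.json are relative to the train folder, which should be confirmed against the actual output. The helper names are hypothetical. After conversion, one would expect 5,000 entries under `images` and category names matching class_label.txt.

```python
import json
from pathlib import Path


def load_coco(path):
    """Read a COCO-format JSON file such as train.json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def missing_image_files(coco, image_dir):
    """Return file names listed under 'images' that do not exist in image_dir."""
    folder = Path(image_dir)
    return [img["file_name"] for img in coco["images"]
            if not (folder / img["file_name"]).is_file()]


def category_names(coco):
    """Category names ordered by category ID."""
    return [c["name"] for c in sorted(coco["categories"], key=lambda c: c["id"])]
```

For example, `missing_image_files(load_coco("train.json"), "train")` should return an empty list when every annotated image is in place.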

Settings

To start JustDeepIt, we open a terminal and run the following command. Then, we open a web browser, access http://127.0.0.1:8000, and select the Instance Segmentation mode.

```shell
justdeepit

# INFO:uvicorn.error:Started server process [61]
# INFO:uvicorn.error:Waiting for application startup.
# INFO:uvicorn.error:Application startup complete.
# INFO:uvicorn.error:Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
```

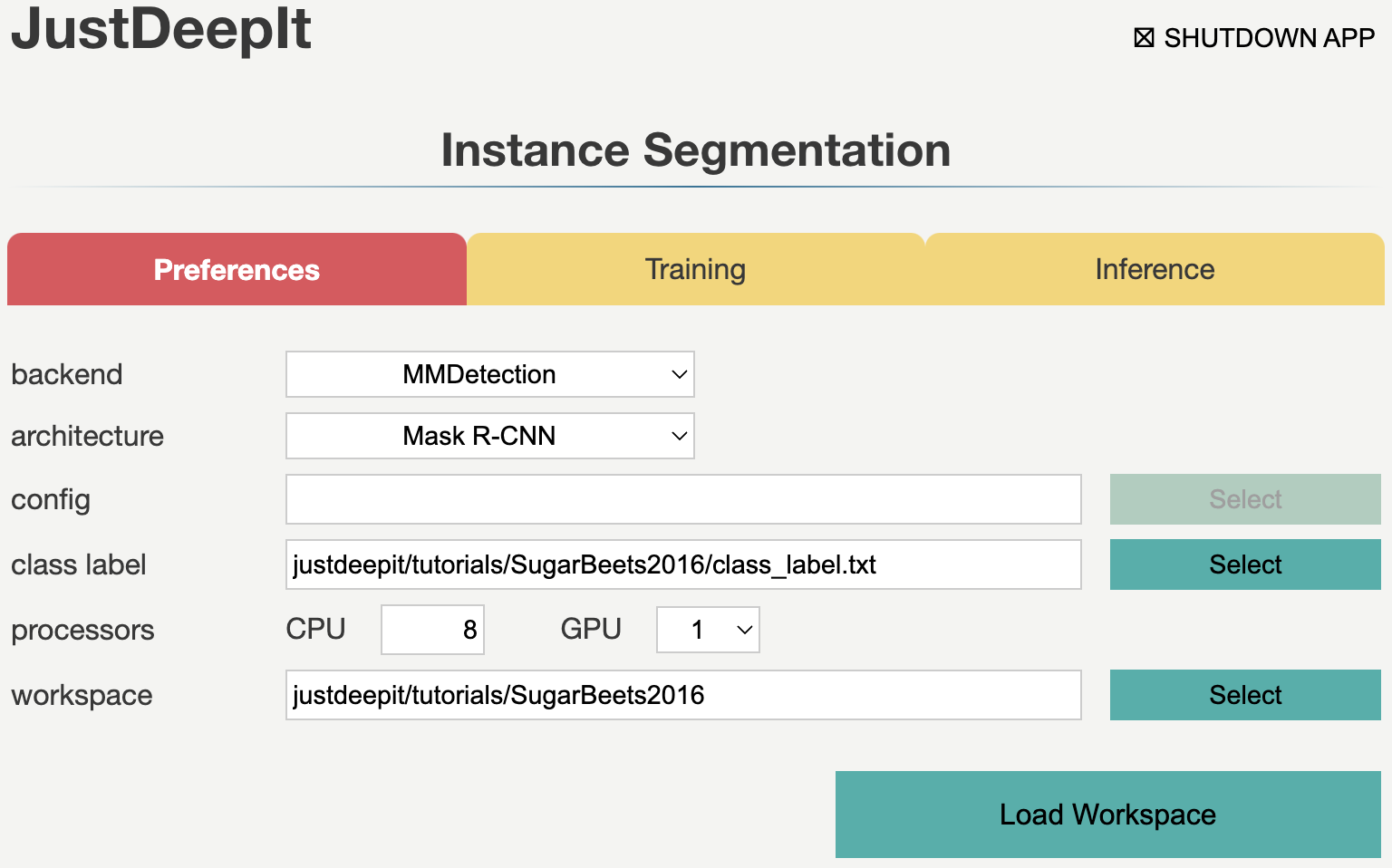

We set the architecture to Mask R-CNN, the workspace to the location containing the train folder and the train.json file, and the other parameters as shown in the screenshot. Note that the workspace value may differ from the screenshot depending on the user’s environment. Then, we press the Load Workspace button. Once the workspace is loaded, the Training and Inference functions become available.

Training

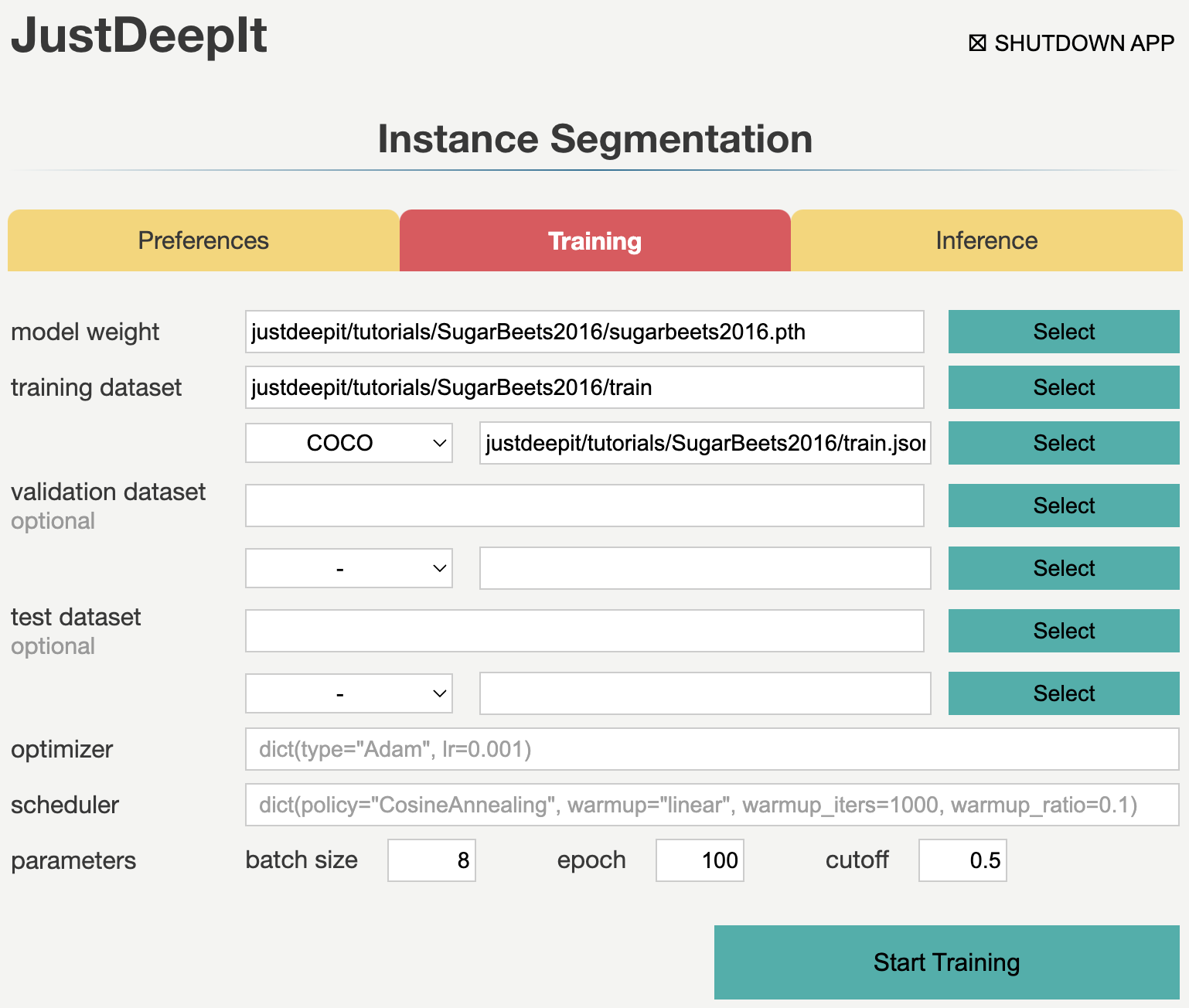

To train the model, we select the Training tab and set model weight to the location where the trained weights will be stored, image folder to the folder containing the training images (i.e., train), annotation format to the format of the annotation file (COCO in this case), and annotation to the image annotation file (i.e., train.json). The other parameters are set as shown in the screenshot. Note that the values of model weight, image folder, and annotation may differ from the screenshot depending on the user’s environment. Then, we press the Start Training button to train the model. Training takes about 20 hours, although the actual time depends on the computer hardware.

Inference

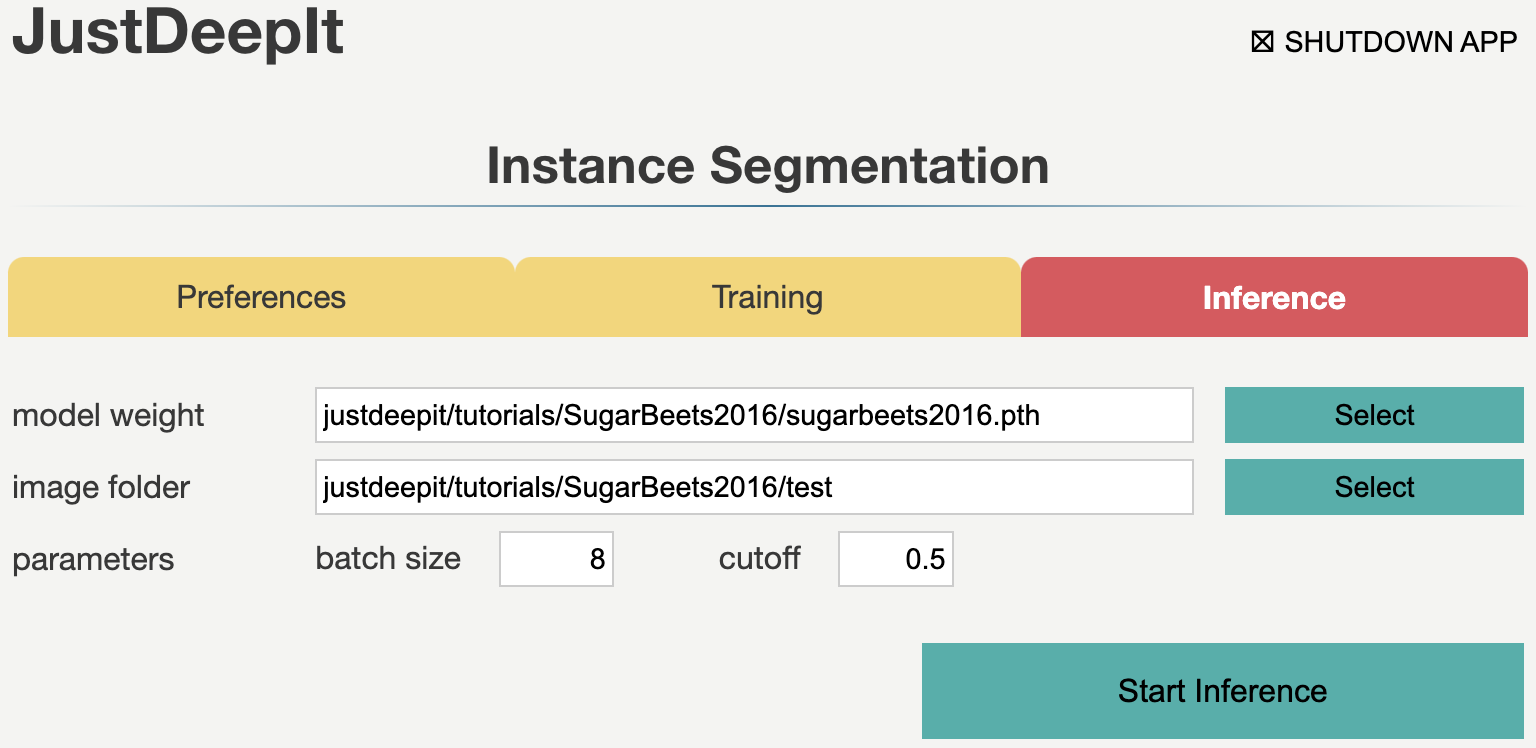

In the Inference tab, we set model weight to the trained weights, which generally have the .pth file extension. Then, we set image folder to the folder containing the images to be segmented (i.e., test), and the other parameters as shown in the screenshot. Note that the values of model weight and image folder may differ from the screenshot depending on the user’s environment. Next, we press the Start Inference button to run instance segmentation.

The inference results are stored in the justdeepitws/outputs folder of the workspace as images with bounding boxes and contours, and as a JSON file in the COCO format (annotation.json).
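Because annotation.json follows the COCO format, the predictions can be summarized with a few lines of Python. This sketch assumes the file contains the standard `images`, `annotations`, and `categories` sections, and that the working directory is the workspace. The helper names are hypothetical.

```python
import json
from collections import Counter


def load_results(path="justdeepitws/outputs/annotation.json"):
    """Read the COCO-format inference output (path relative to the workspace)."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def instances_per_class(coco):
    """Count predicted instances per class name."""
    names = {c["id"]: c["name"] for c in coco["categories"]}
    return Counter(names[a["category_id"]] for a in coco["annotations"])


def instances_per_image(coco):
    """Map each image file name to its number of predicted instances (0 if none)."""
    counts = Counter(a["image_id"] for a in coco["annotations"])
    return {img["file_name"]: counts[img["id"]] for img in coco["images"]}
```

For example, `instances_per_class(load_results())` gives the total number of predicted sugar beet and weed instances over the test images.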

Examples of crop and weed segmentation results are shown in the figure below.

API

Model training and inference can also be performed with the JustDeepIt API. The Python script run_justdeepit.py, stored on GitHub (JustDeepIt/tutorials/SugarBeets2016/scripts), can be used for this purpose.

```shell
cd JustDeepIt/tutorials/SugarBeets2016

# run instance segmentation with the Detectron2 backend
python scripts/run_justdeepit.py train
python scripts/run_justdeepit.py test

# run instance segmentation with the MMDetection backend
python scripts/run_justdeepit.py train mmdetection
python scripts/run_justdeepit.py test mmdetection
```
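To run both steps for a chosen backend in one go, with inference starting only after training has succeeded, the commands can be wrapped in a small Python driver. This hypothetical sketch only calls the script with the same arguments as the shell commands. It assumes the driver is started from JustDeepIt/tutorials/SugarBeets2016 and that run_justdeepit.py exits with a non-zero status when a step fails. Omitting the backend corresponds to the Detectron2 commands, which pass no backend argument.

```python
import subprocess
import sys


def justdeepit_commands(backend=None, script="scripts/run_justdeepit.py"):
    """Build the train and test command lines; backend=None passes no backend argument."""
    extra = [backend] if backend else []
    return [[sys.executable, script, step, *extra] for step in ("train", "test")]


def run_pipeline(backend=None, dry_run=False):
    """Run training and then inference, stopping if training fails."""
    for cmd in justdeepit_commands(backend):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError on a non-zero exit code,
            # so the test step never runs after a failed training run.
            subprocess.run(cmd, check=True)
```

For example, `run_pipeline("mmdetection", dry_run=True)` prints the two MMDetection commands without executing them.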